LONDON: It’s easy to spot when something was written by artificial intelligence, isn’t it? The text is so generically bland. Even if it seems superficially impressive it lacks edge. Plus there are the obvious tells – the em dashes, the “rule of three” examples and the constant use of words like “delve” and “underscore”. The writing, as one machine learning researcher put it, is “mid”.

Yet every single one of these apparently obvious giveaways can be applied to human writing.

Three consecutive examples are a common formulation in storytelling. Words like “underscore” are used in professional settings to add emphasis. Journalists really love em dashes. None of it is unique to AI.

Read the “how to spot undisclosed AI” guides from the likes of Wikipedia and you’ll receive a lot of contradictory advice. Both repetition and variation are supposed to be indicators.

Even AI detection tool providers acknowledge that, because AI models are evolving and “human writing varies widely”, they cannot guarantee accuracy. Not that this has stopped a cottage industry of online “experts” declaring that they can just tell when something apparently written by a person was really generated by AI.

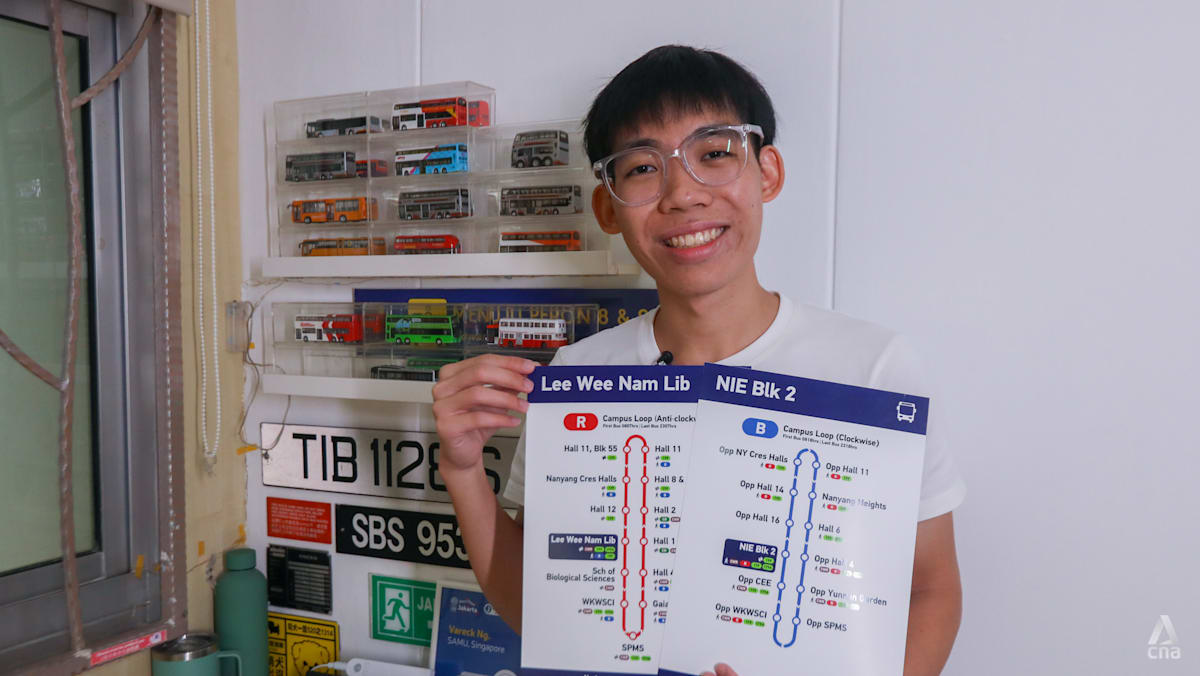

The idea that we have an innate ability to sense human depth in words is an illusion. (Photo: CNA/Ili Nadhirah Mansor)

AN ILLUSION

The idea that we have an innate ability to sense human depth in words is an illusion.

I know this because last year I was fooled into spending 15 minutes of my life talking to a chatbot posing as an estate agent on WhatsApp. Looking back, maybe their chirpy interest in my flat hunt should have been a giveaway.

But there was nothing unusual in their words. In fact, the only reason I know it was a chatbot is because it told me – something that induced such rage I immediately blocked them.

Here are a few stats I found to make myself feel better about all those wasted minutes. An Ipsos poll of 9,000 people published last month found that 97 per cent of people couldn’t tell the difference between an AI-generated song and a human one.

And last year, a University of Pittsburgh survey of more than 1,600 people asked to read poetry written by humans and AI found they were more likely to guess the AI-generated poems had been written by people.

These studies run counter to the idea many of us have that we can instinctively tell when something is AI.

REFLECTING BLAND TEXT BACK AT USERS

It’s true that the best writing has a tangible warmth or strangeness that would be hard to replicate. But a lot of writing is not like this.

The world is full of bland text, much of it used to train large language models (LLMs). Generative AI text is just reflecting that reality back at us.

This doesn’t mean that LLMs aren’t producing sentences of strangely uniform length and using certain words a lot. They are. But not enough for these to be unfailing giveaways.

An editor at a local Canadian newspaper went viral this year for exposing a freelancer who apparently used AI to make up articles. While he claimed their “rote phrasing” should have been a giveaway, what actually raised his suspicion was the fact that someone with New York media bylines claimed to live in Toronto. It was context, not content.

Early AI-generated text was far more obvious. For one thing, it was often wrong. Now we are at a confusing point where AI-generated work is more polished and is encouraged in workplaces, yet has such low status that its undisclosed use is regarded as shameful.

SPELLING MISTAKES, EM DASHES?

Despite this, there is little appetite for watermarks that would clarify matters. Tech companies have done an excellent job of convincing the world that any sort of restriction would come at the cost of “winning the race”. The EU has postponed the implementation of part of its AI Act. US President Donald Trump wants to block state AI laws.

That leaves us in a bind. If you suspect something was written by AI you can ask “Grok is this real?” on social media or paste it into AI detection tools like GPTZero, Copyleaks and Surfer. Neither is infallible.

Surfer even has a tool that will “humanise” your AI work. When I fed AI text into the humaniser and then back into Surfer’s AI detector it told me that the words were 100 per cent written by a human.

That leaves context. If you know someone’s communication style is sparse and they produce 800 words of florid text, it was probably AI generated. If a nice estate agent tries to chat with you on WhatsApp, then ask them if they’re real.

Errors like spelling mistakes probably mean something was human generated, too. But don’t get complacent. Haven’t you noticed that AI companies pulled back on em dashes once people started to see them as a warning sign?

If spelling mistakes are all it takes to generate trust in AI text, it’d be easy enough to programme them.

Source: Financial Times/ch