SINGAPORE: More than 900 inauthentic Facebook accounts posting over 5,000 comments were detected in a roughly one-day period after the government first alerted the public to foreign posts on religion and politics, recent checks by CNA have found.

CNA’s latest analysis follows its Apr 24 report that hundreds of fake Facebook accounts and bots were spreading anti-People’s Action Party (PAP) and anti-Workers’ Party (WP) sentiments.

CNA conducted another round of checks after it detected a hive of social media activity around news posts on race and politics, following the government’s announcement at 10pm on Apr 25 that foreigners had posted online election advertisements urging Singaporeans to vote along religious lines.

The government had directed Meta to block Singapore users’ access to several posts made by two Malaysian politicians, as well as a former Internal Security Act (ISA) detainee.

For the latest analysis, CNA looked at 35 Facebook posts related to these news developments that were uploaded between late Apr 25 and early Apr 27.

These include three from Islamic religious teacher Noor Deros, one from "Zai Nal" - belonging to the former ISA detainee - and one from the WP. The remainder are posts by news outlets CNA (12), Mothership (10) and The Straits Times (eight).

These posts involve responses or reactions to the government's move, as well as the news outlets’ reporting of comments by Prime Minister Lawrence Wong and WP chief Pritam Singh on the foreign interference.

CNA then scanned 8,991 comments made by 2,915 unique Facebook accounts on these posts.

Of the 8,991 comments made, 5,201 - or 58 per cent - were deemed to be from inauthentic accounts, meaning they were fake or suspected to be fake.

A total of 911 out of the 2,915 accounts that posted the comments, or 31 per cent, were identified as inauthentic.

Another 1,427 accounts - or 49 per cent - were flagged as real accounts, while the authenticity of the remaining 577 accounts could not be determined.
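The percentages above follow directly from the reported counts. As a quick check, the short Python sketch below recomputes them; the variable names and the `share` helper are illustrative only and are not part of CNA's analysis.

```python
# Counts as reported in the article.
total_comments = 8_991
inauthentic_comments = 5_201
total_accounts = 2_915
inauthentic_accounts = 911
real_accounts = 1_427

# Accounts whose authenticity could not be determined.
undetermined_accounts = total_accounts - inauthentic_accounts - real_accounts


def share(part, whole):
    """Return part/whole as a percentage rounded to the nearest whole number."""
    return round(100 * part / whole)


print(undetermined_accounts)                        # 577
print(share(inauthentic_comments, total_comments))  # 58
print(share(inauthentic_accounts, total_accounts))  # 31
print(share(real_accounts, total_accounts))         # 49
```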

The overall data suggest the fake accounts are part of a coordinated inauthentic campaign, with synchronised comment bursts, copy-pasted messages across accounts, and a tightly interconnected network of fake personas.

Fifteen of the 911 inauthentic accounts detected posted between 30 and more than 70 comments each, while around 290 of them posted only a single comment.

This suggests a mix of roles: A core group of active operators and many auxiliary accounts likely created to lend additional voices in one or two comment threads.

Slightly over one-quarter (26.9 per cent) of the 5,201 inauthentic comments were classified as anti-opposition attacks, followed by religious and political controversy (23.9 per cent), and anti-establishment and conspiracy (19.1 per cent).

The remaining comments included themes like racial and minority issues as well as calls for religious neutrality.

An inauthentic comment only means that the comment was made by a fake account. It does not automatically mean that the content of the comment is false, though further analysis of the content shows half-truths and misinformation being used in some cases.

CNA is unable to determine where these fake accounts originate from, as users can easily lie during account registration or spoof their locations through various methods.

Categories of fake comments

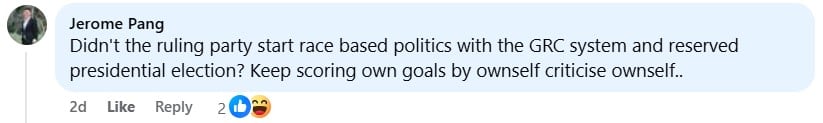

Anti-opposition attacks

Many bots directly attacked WP figures or supporters with derogatory language.

These comments often used insults to demean and discredit.

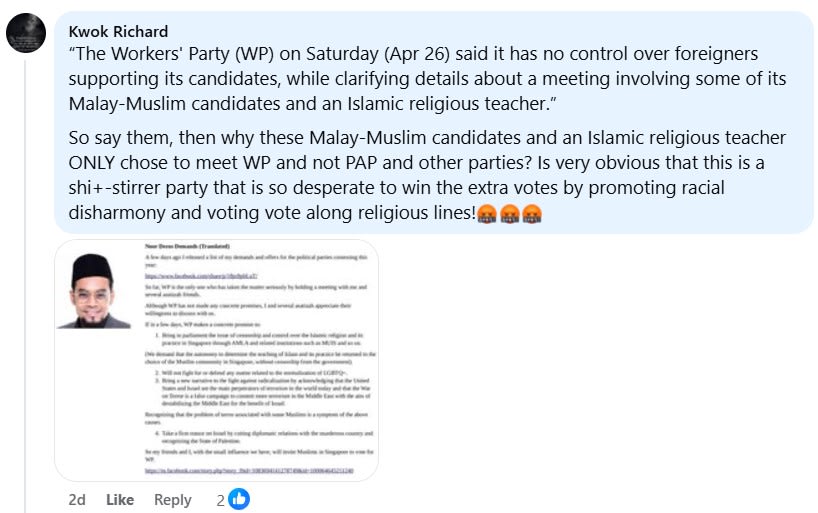

Religious and political controversy

Comments in this category linked religion to politics and typically also took on a partisan nature, either by attacking WP for mixing religion with politics, or accusing the PAP of using this issue to smear the opposition.

For example, one coordinated message spread by multiple accounts said WP was being desperate to win votes by promoting religious disharmony.

On the other hand, others said the PAP was trying to bring religious issues into the election.

Anti-establishment and conspiracy

The criticisms against the PAP generally accuse the ruling party of hypocrisy, with some saying it is the one playing the race card.

Also in the mix are conspiracy themes, with bot accounts suggesting that China or Malaysia may be behind the attempts to influence the election.

Racial and minority issues

Posts in this category appear to be aimed at sowing suspicion or eroding trust between races.

For example, some fake accounts would try to create resentment among Malay voters by suggesting they were being used by either party.

Calls for religious neutrality

In this category, bots try to push or repeat messages about the dangers of mixing religion with politics.

While the comments may be seen as trying to urge caution, the fact that these were posted by fake accounts suggests the bots may be attempting to reinforce establishment messages.

For example, one comment said: “like what the article say ‘We must not mix religion and politics. Singapore is a secular state. Our institutions serve Singaporeans equally. Bringing religion into politics will undermine social cohesion and harmony, as we have seen in other countries with race- or religion-based politics.’”


BOTS TARGETED CNA THE MOST

Telltale signs of inauthentic accounts include being recently created, having few connections, and posting mainly on a single type of content, such as those that attack a political party and its candidates.

They also tend to be locked and lack any original posts, such as photos of someone’s day.

Bots, on the other hand, are multiple fake accounts managed by individuals, which also suggests a more coordinated attempt to spread certain messaging on the platform.

They are also known to make repeated comments or replies, similar to techniques used by spammers. Sometimes, multiple fake accounts end up posting similar comments, albeit with slight variations to avoid detection.
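CNA has not described how it matched comments that differ only slightly. One possible approach, shown here purely as an assumption, is to normalise case and whitespace and then compare texts with the `difflib.SequenceMatcher` class from Python's standard library. The function names and the 0.9 threshold are hypothetical choices for illustration.

```python
from difflib import SequenceMatcher


def normalise(text):
    """Lower-case the text and collapse runs of whitespace to single spaces."""
    return " ".join(text.lower().split())


def is_near_duplicate(a, b, threshold=0.9):
    """Return True if two comments are at least `threshold` similar.

    The 0.9 cut-off is an arbitrary illustrative value, not one used by CNA.
    """
    ratio = SequenceMatcher(None, normalise(a), normalise(b)).ratio()
    return ratio >= threshold


# Two made-up comments that differ only in spacing and punctuation.
print(is_near_duplicate("WP is desperate to win votes",
                        "WP is  desperate to win votes!!"))  # True
```

A threshold this high only catches light edits such as added punctuation; a real analysis would need to tune it against manually reviewed examples.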

Both types of behaviour run afoul of Facebook’s community standards.

According to Facebook’s website, the platform also requires users to provide real names on profiles to ensure they always know who they are connecting with. “This helps keep our community safe,” its name and birthday policy states.

The latest analysis indicated that CNA’s posts were the most targeted by the bot army, with 2,578 inauthentic comments on the 12 CNA Facebook posts. This accounted for nearly half the 5,201 inauthentic comments.

The most targeted post - attracting 612 inauthentic comments - was its post on WP's initial statement on the foreign interference. CNA’s post was uploaded at 9.38am on Apr 26.

An example of a partisan comment made by an inauthentic account on CNA's Facebook post on WP's initial statement on the foreign interference.


The second most targeted post was also by CNA, with 436 inauthentic comments on the link to its article taking a closer look at who Mr Noor is.

An example of a partisan comment made by an inauthentic account on CNA's Facebook post on Islamic religious teacher Noor Deros.


The Straits Times was the second most targeted with 1,440 inauthentic comments on its posts, while Mothership ranked third with 998 inauthentic comments on its posts.

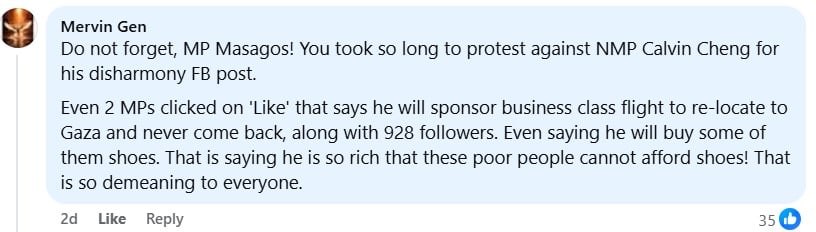

An example of a partisan comment made by an inauthentic account on Mothership's Facebook post on Minister-in-charge of Muslim Affairs Masagos Zulkifli's comments.


While posts by news outlets bore the brunt of bot-like campaigns, there was low bot presence detected on related posts by the WP and also by Mr Noor, which drew just 90 and 91 inauthentic comments, respectively.

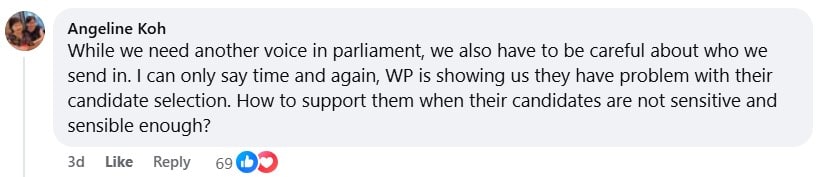

An example of a partisan comment made by an inauthentic account on CNA's Facebook post on WP's initial statement on the foreign interference.


This is indicative of how bot campaigns typically work: Bots are not interested in preaching to the choir.

HIGHLY SYNCHRONISED POSTING OF COMMENTS

Posting of the comments was found to be highly synchronised, particularly between 10am and 3pm on Apr 26, when the bot accounts as a whole were found to have posted comments most frequently.

It was common to see many different fake accounts commenting within minutes of each other on the same post right after it went up.

For example, a 10-minute window of around 2.35pm to 2.45pm on Apr 26 saw 90 distinct fake accounts post comments.

There was also a burst of 157 inauthentic comments from 10pm to 11pm on Apr 25, right when news of the government’s statement broke.

The bot tactics here reflect attempts to align with local audience attention, and to influence public opinion by setting narratives overnight so that they are in place by morning, and then reinforcing them during the day.

Analysis of the timings of the comments revealed orchestrated burst patterns. The bot network could repeatedly mobilise at least 10 distinct accounts within a 10-minute window.
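The burst pattern described above can be illustrated with a sliding-window count of distinct accounts. The sketch below is not CNA's method: it assumes each comment is a `(timestamp, account)` pair and anchors a 10-minute window at every comment. The `find_bursts` name, its parameters and the sample data are hypothetical.

```python
from collections import Counter
from datetime import datetime, timedelta


def find_bursts(comments, window=timedelta(minutes=10), min_accounts=10):
    """Return (window_start, distinct_accounts) for each window that starts at
    a comment and contains at least `min_accounts` distinct accounts."""
    ordered = sorted(comments)   # sort by timestamp, then account name
    active = Counter()           # account -> comments inside the current window
    bursts = []
    end = 0
    for start_time, account in ordered:
        # Extend the window to cover [start_time, start_time + window).
        while end < len(ordered) and ordered[end][0] < start_time + window:
            active[ordered[end][1]] += 1
            end += 1
        if len(active) >= min_accounts:
            bursts.append((start_time, len(active)))
        # Drop the anchoring comment before moving to the next window.
        active[account] -= 1
        if active[account] == 0:
            del active[account]
    return bursts


# Made-up data: 12 accounts commenting 20 seconds apart, then one late comment.
base = datetime(2025, 4, 26, 14, 35)
sample = [(base + timedelta(seconds=20 * i), f"account_{i}") for i in range(12)]
sample.append((base + timedelta(hours=3), "account_late"))
print(find_bursts(sample)[0])  # window starting at 2.35pm with 12 distinct accounts
```

Counting distinct accounts rather than raw comments matters here, because a single prolific account posting many times in 10 minutes would not by itself indicate coordination.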

TOP BOT AND SUPERSPREADERS

The top bot detected in this campaign is Facebook user “Angeline Tan”, who posted 71 times in a four-and-a-half hour span between 8.19pm on Apr 26 and 12.54am on Apr 27.

The user’s 71 comments were posted on just two CNA posts uploaded on Apr 26 - a video post of Mr Wong’s comments during a press conference on the foreign interference, and another earlier video post of Mr Singh’s comments to the media on the same matter.

A handful of inauthentic accounts were also identified as superspreaders because they appeared to comment on each of these posts, and their Facebook connections show that they are affiliated with a large number of suspicious accounts that also posted similar messages, a pattern known as “co-commenting”.

This could mean that they may be the main operators of these bot networks, or that automated bots take the cue from them.

The top five accounts based on the number of unique accounts they co-commented with are “Low SiewFai”, “Maetiara Ismail”, “PS FU”, “Vincent Tang” and “Dunkie Rookie”, all of which are connected to at least 700 other inauthentic accounts.

These accounts each engaged in between 10 and 16 targeted comment threads, ensuring that the narratives permeated multiple audiences.

Low SiewFai, for instance, was connected to 776 other suspect accounts and was active on at least 16 different posts, with 26 comments. The user’s comments were nearly identical.
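The article does not spell out how co-commenting links were counted. One simple reading, offered here only as an assumption, is that two accounts are linked if they commented on the same post. The sketch below counts, for each account, the number of unique other accounts it shares at least one post with; `co_commenter_counts` and the sample data are hypothetical.

```python
from collections import defaultdict


def co_commenter_counts(comments):
    """Given (post_id, account) pairs, return a dict mapping each account to
    the number of unique other accounts that commented on the same posts."""
    accounts_by_post = defaultdict(set)
    for post_id, account in comments:
        accounts_by_post[post_id].add(account)

    partners = defaultdict(set)
    for accounts in accounts_by_post.values():
        for account in accounts:
            partners[account] |= accounts  # includes the account itself for now

    # Exclude each account from its own partner set before counting.
    return {account: len(others - {account}) for account, others in partners.items()}


# Made-up data: account "a" appears on two posts and so links to "b" and "c".
sample = [("p1", "a"), ("p1", "b"), ("p2", "a"), ("p2", "c"), ("p3", "d")]
print(co_commenter_counts(sample))  # {'a': 2, 'b': 1, 'c': 1, 'd': 0}
```

Under this reading, an account that comments on many heavily targeted posts will naturally accumulate a high count, so the figure is a signal to investigate rather than proof of control over the network.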

CNA’s checks also found at least 179 distinct comments that were repeated word for word by multiple accounts. Many appear to be pre-written scripts or talking points injected into discussions.

One example, posted 43 times, is: “Details of the earlier conversation between WP and the former religious teacher - available here for those interested:”.

Another example, posted 38 times, is: “Full video of the conversation between Mr. Noor Deros and #WP available here for those interested:”.

Both examples were appended with spam links.
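Finding comments repeated word for word by multiple accounts amounts to grouping comments by their exact text. The sketch below shows one way this could be done, assuming each comment is an `(account, text)` pair; it is an illustration rather than CNA's actual procedure, and `repeated_comments` and the sample accounts are hypothetical.

```python
from collections import defaultdict


def repeated_comments(comments, min_accounts=2):
    """Return {text: (times_posted, distinct_accounts)} for every comment text
    posted verbatim by at least `min_accounts` different accounts."""
    accounts_by_text = defaultdict(set)
    posts_by_text = defaultdict(int)
    for account, text in comments:
        key = text.strip()  # ignore leading and trailing whitespace only
        accounts_by_text[key].add(account)
        posts_by_text[key] += 1
    return {
        text: (posts_by_text[text], len(accounts))
        for text, accounts in accounts_by_text.items()
        if len(accounts) >= min_accounts
    }


# Made-up accounts posting the scripted line quoted in the article.
script = ("Details of the earlier conversation between WP and the former "
          "religious teacher - available here for those interested:")
sample = [("acct_1", script), ("acct_2", script), ("acct_2", script),
          ("acct_3", "An unrelated one-off comment")]
print(repeated_comments(sample)[script])  # (3, 2)
```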

The Ministry of Digital Development and Information said earlier that it was looking into the fake accounts, in response to CNA’s report on Apr 24.

“We will continue to monitor the online space for foreign interference and will act on content that is in breach of our laws. We urge the public to be discerning consumers of information,” it said.

CNA had also reached out to Facebook parent company Meta, which has not responded so far.
